Class imbalance is a prevalent issue in many fields, particularly in machine learning and data analysis. It occurs when the classes in a dataset are unevenly distributed, meaning that one class has significantly more instances than the others.

This class imbalance poses a challenge for many machine learning algorithms as they tend to favour the majority class, leading to biased predictions and inaccurate models.

Understanding and addressing the issue of class imbalance is crucial as it can have a significant impact on the performance and reliability of machine learning models.

When faced with imbalanced datasets, conventional algorithms may struggle to correctly identify and classify instances from the minority class. As a result, the predictions these models make for that class become unreliable.

In order to develop robust and effective algorithms, it is important to first identify and explore the underlying reasons and implications of class imbalance. This will enable researchers and practitioners to devise suitable strategies and techniques to mitigate the biases introduced by imbalanced datasets.

The problem of class imbalance

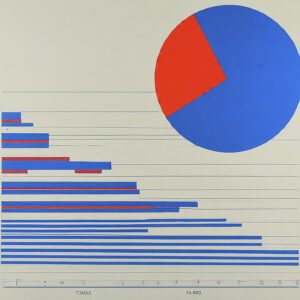

Class imbalance refers to a situation in which the distribution of classes in a dataset is highly skewed, meaning that one class is significantly more prevalent than the others. This can limit the accuracy and effectiveness of predictive models in several ways.

The presence of class imbalance can lead to biased outcomes and reduced predictive performance.

When one class dominates the dataset, the model tends to prioritize that class and may overlook the minority class. As a result, predictive accuracy for the minority class is often much lower than for the majority class.

This imbalance can create difficulties in accurately understanding patterns and making informed decisions, particularly for applications where the minority class is of particular interest, such as fraud detection or rare disease diagnosis.

Addressing class imbalance is crucial to ensure fair and reliable predictions, as well as to improve the overall performance of predictive models.

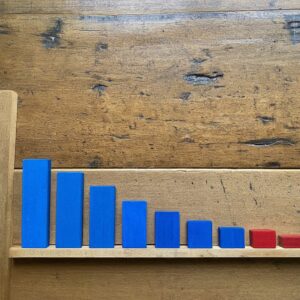

Example dataset for class imbalance

To better understand the effects of class imbalance in machine learning, let’s consider an example dataset.

Suppose we have a dataset of credit card transactions with the goal of detecting fraudulent transactions. In this dataset, the majority class would represent legitimate transactions, while the minority class would represent fraudulent transactions.

Due to the nature of credit card fraud, fraudulent transactions are relatively rare compared to legitimate ones. This scenario results in a class imbalance problem, where machine learning algorithms may struggle to accurately classify fraudulent transactions due to the limited amount of available data in the minority class.

Understanding such an example dataset for class imbalance helps us grasp the nuances and challenges associated with effectively addressing this issue.


How to detect and address the class imbalance problem

Resampling

One of the first steps in addressing the issue of class imbalance in datasets is to identify whether it exists in the first place.

Several key indicators can help detect the presence of class imbalance. First, examining the distribution of the target variable can provide crucial insights.

If one class significantly dominates the distribution while the other classes are underrepresented, an imbalance is likely present. Additionally, the imbalance ratio, calculated by dividing the number of instances in the majority class by the number of instances in the minority class, quantifies the level of imbalance.

A large ratio indicates a greater imbalance in the dataset.
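As a minimal sketch, assuming the labels are held in a plain Python list, the class distribution and the imbalance ratio (taken here as majority count divided by minority count) can be computed with `collections.Counter`. The helper `class_distribution` is a hypothetical name, and the toy labels (0 for legitimate, 1 for fraudulent transactions) are made up for illustration.

```python
from collections import Counter


def class_distribution(labels):
    """Return per-class counts and the imbalance ratio (majority count / minority count)."""
    counts = Counter(labels)
    majority = max(counts.values())
    minority = min(counts.values())
    return dict(counts), majority / minority


# Hypothetical toy labels: 0 = legitimate transaction, 1 = fraudulent transaction
labels = [0] * 990 + [1] * 10
counts, ratio = class_distribution(labels)
print(counts)  # {0: 990, 1: 10}
print(ratio)   # 99.0
```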

Furthermore, analyzing a classifier’s performance on each class separately, rather than its overall accuracy alone, can signal the presence of class imbalance.

If the classifier performs considerably better on the majority class compared to the minority class, it suggests that the classifier has learned to prioritize the majority class due to its prevalence.

This is a common effect of class imbalance, as the minority class tends to be overshadowed and disproportionately misclassified.
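The sketch below illustrates this indicator with a hypothetical model that labels every transaction as legitimate: its overall accuracy looks excellent, yet its recall (the fraction of each class it gets right) is zero for the minority class. The labels, predictions and the helper `per_class_recall` are assumptions for illustration.

```python
def per_class_recall(y_true, y_pred):
    """For each true class, return the fraction of its instances predicted correctly."""
    recall = {}
    for cls in sorted(set(y_true)):
        hits = [p == cls for t, p in zip(y_true, y_pred) if t == cls]
        recall[cls] = sum(hits) / len(hits)
    return recall


# Hypothetical model that predicts "legitimate" (0) for every transaction
y_true = [0] * 990 + [1] * 10
y_pred = [0] * 1000

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(accuracy)                          # 0.99
print(per_class_recall(y_true, y_pred))  # {0: 1.0, 1: 0.0}
```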

By carefully evaluating these indicators and recognizing the existence of class imbalance, researchers can then employ appropriate resampling techniques to rectify the problem.

Random undersampling

Random undersampling is a widely used technique for addressing class imbalance in datasets.

As the name suggests, this method involves randomly selecting a subset of the majority class samples to match the number of samples in the minority class.

By reducing the number of majority class samples, the dataset becomes more balanced, allowing the machine learning algorithm to give equal consideration to the minority class.
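A minimal sketch of random undersampling in plain Python follows, assuming feature rows are lists and labels are hashable values. `random_undersample` is a hypothetical helper; in practice, the imbalanced-learn library provides this technique as `RandomUnderSampler`.

```python
import random
from collections import defaultdict


def random_undersample(X, y, seed=0):
    """Randomly discard rows of larger classes until every class has as many rows as the smallest."""
    rng = random.Random(seed)  # seeded for reproducibility
    by_class = defaultdict(list)
    for features, label in zip(X, y):
        by_class[label].append(features)
    target = min(len(rows) for rows in by_class.values())
    X_out, y_out = [], []
    for label, rows in by_class.items():
        # sample without replacement, so no row appears twice
        for features in rng.sample(rows, target):
            X_out.append(features)
            y_out.append(label)
    return X_out, y_out


# Hypothetical toy data: 90 majority rows (label 0) and 10 minority rows (label 1)
X = [[float(i)] for i in range(100)]
y = [0] * 90 + [1] * 10
X_bal, y_bal = random_undersample(X, y)
print(y_bal.count(0), y_bal.count(1))  # 10 10
```

All minority rows survive; only the majority class loses rows, which is exactly where the information loss discussed below comes from.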

Implementing random undersampling requires careful consideration of the trade-offs involved.

On one hand, discarding majority class samples loses information, potentially compromising the accuracy and generalizability of the model.

On the other hand, this technique counteracts the model’s bias toward the majority class, where the model becomes too specialized in predicting the majority class and fails to accurately classify the minority class.

It is important to strike a balance between reducing class imbalance and preserving the integrity of the dataset to ensure meaningful and reliable results.

Random oversampling

Random oversampling is a technique used to address class imbalance in a dataset.

This technique aims to increase the number of minority class samples by randomly duplicating them.

By doing so, the minority class carries more weight during model training, giving it better representation and reducing the impact of the class imbalance on the model’s performance.

Applying random oversampling involves duplicating randomly chosen minority class instances (sampling with replacement) until the minority class has a similar number of instances to the majority class.

This helps to create a balanced dataset and allows the model to learn from a more representative sample.
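Here is the same idea in reverse, as a sketch under the same assumptions (`random_oversample` is a hypothetical helper; imbalanced-learn offers `RandomOverSampler` for this). Minority rows are drawn with replacement, so every added row is an exact copy of an existing one.

```python
import random
from collections import defaultdict


def random_oversample(X, y, seed=0):
    """Duplicate random rows of smaller classes until every class matches the largest."""
    rng = random.Random(seed)  # seeded for reproducibility
    by_class = defaultdict(list)
    for features, label in zip(X, y):
        by_class[label].append(features)
    target = max(len(rows) for rows in by_class.values())
    X_out, y_out = list(X), list(y)
    for label, rows in by_class.items():
        # draw with replacement: each added row is an exact copy of an existing one
        extra = rng.choices(rows, k=target - len(rows))
        X_out.extend(extra)
        y_out.extend([label] * len(extra))
    return X_out, y_out


# Hypothetical toy data: 90 majority rows (label 0) and 10 minority rows (label 1)
X = [[float(i)] for i in range(100)]
y = [0] * 90 + [1] * 10
X_bal, y_bal = random_oversample(X, y)
print(y_bal.count(0), y_bal.count(1))  # 90 90
```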

However, random oversampling can lead to overfitting: because the added instances are exact copies of existing ones, the model may memorize those specific points rather than learn patterns that generalize to new minority class instances.

Therefore, it is essential to carefully evaluate the consequences of this technique and consider other approaches if necessary.

Synthetic Minority Oversampling Technique

Synthetic Minority Oversampling Technique (SMOTE) is a well-known solution to address class imbalance in datasets.

By generating synthetic examples of the minority class, SMOTE aims to increase the representation of the minority class and achieve a more balanced dataset. This technique involves creating new instances by interpolating the feature space between existing minority class samples.

SMOTE works by randomly selecting a minority class instance and finding its k nearest neighbors within the minority class.

From these neighbors, SMOTE generates new synthetic instances by randomly selecting a neighbor and creating a synthetic example along the line connecting the selected instance and its neighbor.

This process is repeated until the desired class balance is achieved. By supplementing the minority class with synthetic examples rather than exact duplicates, SMOTE gives the classifier a more varied set of minority class instances to learn from, which can improve its ability to classify them accurately.
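The following is a simplified SMOTE sketch for numeric feature vectors, written with the standard library only so each step is visible. `smote` is a hypothetical function, not the imbalanced-learn API (which exposes the technique as `imblearn.over_sampling.SMOTE`), and its brute-force neighbor search is fine for small examples but slow on large datasets.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Create n_new synthetic samples from a list of minority-class feature vectors."""
    rng = random.Random(seed)  # seeded for reproducibility
    k = min(k, len(minority) - 1)  # cannot have more neighbors than other samples
    synthetic = []
    for _ in range(n_new):
        # 1. pick a random minority sample
        i = rng.randrange(len(minority))
        base = minority[i]
        # 2. find its k nearest neighbors among the other minority samples
        others = [p for j, p in enumerate(minority) if j != i]
        neighbors = sorted(others, key=lambda p: math.dist(base, p))[:k]
        # 3. pick one neighbor and a random point on the segment between the two
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # uniform in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbor)])
    return synthetic


# Hypothetical 2-D minority samples
minority = [[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.3], [0.9, 0.8]]
new_points = smote(minority, n_new=10, k=3)
print(len(new_points))  # 10
```

Because each synthetic point lies on a segment between two real minority samples, the new points stay inside the region the minority class already occupies.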

NearMiss

NearMiss is a popular technique used to address the problem of class imbalance in datasets.

This resampling method works by selecting a subset of majority class instances that are considered “near” to the minority class instances.

The goal is to create a balanced dataset by undersampling the majority class. By discarding instances that are far from the minority class, NearMiss focuses on preserving the crucial patterns and characteristics of the minority class.

NearMiss comes in three versions, NearMiss-1, NearMiss-2, and NearMiss-3, which differ in how they choose the majority class instances to keep.

NearMiss-1 keeps the majority class instances whose average distance to their k nearest minority class instances is smallest, that is, the majority instances lying closest to the minority class.

NearMiss-2 keeps the majority class instances whose average distance to their k farthest minority class instances is smallest, so the selection depends on the minority class as a whole rather than only on its nearest points.

NearMiss-3 works in two steps. It first keeps, for each minority class instance, its M nearest majority class neighbors; from this shortlist, it then selects the majority class instances whose average distance to their k nearest minority class neighbors is largest. The first step ensures that every minority class instance remains surrounded by some majority class instances in the final dataset.
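Below is a sketch of NearMiss-1 for a binary problem, assuming numeric feature vectors and Euclidean distance. `near_miss_1` is a hypothetical helper; imbalanced-learn implements all three versions through `imblearn.under_sampling.NearMiss` and its `version` parameter.

```python
import math


def near_miss_1(X, y, majority_label, k=3):
    """NearMiss-1 sketch for two classes: keep the majority samples whose mean distance
    to their k nearest minority samples is smallest, until both classes are equal in size."""
    minority = [x for x, label in zip(X, y) if label != majority_label]
    minority_labels = [label for label in y if label != majority_label]
    majority = [x for x, label in zip(X, y) if label == majority_label]
    k = min(k, len(minority))

    def mean_dist_to_nearest_minority(point):
        dists = sorted(math.dist(point, m) for m in minority)
        return sum(dists[:k]) / k

    kept = sorted(majority, key=mean_dist_to_nearest_minority)[: len(minority)]
    return kept + minority, [majority_label] * len(kept) + minority_labels


# Hypothetical 1-D data: majority class 0 spread out, minority class 1 at 1.0 and 2.0
X = [[0.0], [3.0], [6.0], [9.0], [12.0], [15.0], [1.0], [2.0]]
y = [0, 0, 0, 0, 0, 0, 1, 1]
X_nm, y_nm = near_miss_1(X, y, majority_label=0, k=2)
print(X_nm)  # [[0.0], [3.0], [1.0], [2.0]]
print(y_nm)  # [0, 0, 1, 1]
```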

Change the performance metric

One approach to addressing the class imbalance problem in machine learning is to change the performance metric used to evaluate the model.

By default, classifiers are usually evaluated with accuracy, which measures the proportion of correctly classified instances.

However, accuracy can be misleading on imbalanced datasets. If 99% of credit card transactions are legitimate, a model that labels every transaction as legitimate scores 99% accuracy while detecting no fraud at all.

Instead, other performance metrics such as precision, recall, and F1 score can be employed.

Precision measures the proportion of correctly predicted positive instances out of all predicted positive instances, while recall measures the proportion of correctly predicted positive instances out of all actual positive instances.

The F1 score, the harmonic mean of precision and recall, summarizes both in a single number for the positive (typically minority) class. Because true negatives do not enter the calculation, a model cannot achieve a high F1 score simply by classifying the majority class correctly.
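Written out in terms of true positives (TP), false positives (FP), and false negatives (FN) for the positive class, the three metrics are:

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad
\text{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
$$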

These alternative metrics give a clearer picture of how well the model classifies the minority class, which is crucial in the context of imbalanced datasets.
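To make the contrast concrete, the sketch below computes accuracy, precision, recall and F1 for two hypothetical models on made-up labels (990 legitimate, 10 fraudulent transactions): model A always predicts "legitimate", and model B flags 20 transactions, 8 of which are fraudulent. `binary_metrics` is a hypothetical helper; scikit-learn provides equivalent functions such as `precision_score`, `recall_score` and `f1_score`.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy plus precision, recall and F1 for the positive (minority) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    # return 0.0 instead of dividing by zero when a ratio is undefined
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels: 990 legitimate (0) and 10 fraudulent (1) transactions
y_true = [0] * 990 + [1] * 10

# Model A predicts "legitimate" for everything
model_a = [0] * 1000
# Model B flags 12 legitimate transactions and 8 of the 10 frauds
model_b = [0] * 978 + [1] * 12 + [1] * 8 + [0] * 2

for name, preds in [("A", model_a), ("B", model_b)]:
    print(name, {m: round(v, 3) for m, v in binary_metrics(y_true, preds).items()})
# A {'accuracy': 0.99, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
# B {'accuracy': 0.986, 'precision': 0.4, 'recall': 0.8, 'f1': 0.533}
```

Model A has the higher accuracy, but only model B detects any fraud, and only its F1 score reflects that.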

Penalize the algorithm

When dealing with class imbalance, one promising approach is to use penalized (cost-sensitive) algorithms.

These algorithms work by applying a penalty to the misclassification of minority class instances, thereby encouraging the model to pay more attention to these instances during the training process.

By doing so, the algorithm aims to reduce the biased behavior of the model towards the majority class and improve its ability to accurately classify the minority class instances.

A common way of implementing a penalized algorithm is to adjust the weights assigned to each class during training.

The weights can be set so that the model incurs a higher penalty for misclassifying instances of the minority class.

This pushes the algorithm to focus more on correctly identifying these instances, resulting in more balanced classification performance.

It’s important to note that the specific implementation of penalized algorithms may vary depending on the algorithm and the problem at hand.

Thus, careful experimentation and evaluation are necessary to determine the most effective penalty configuration for a given task.
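As a sketch of class weighting, the helper below uses the same heuristic as scikit-learn’s `class_weight="balanced"` option, n_samples / (n_classes * class_count), and applies the resulting weights to a binary cross-entropy loss. The function names and toy labels are assumptions for illustration; real libraries apply the weights inside their own training loss.

```python
import math
from collections import Counter


def balanced_class_weights(y):
    """Weight each class by n_samples / (n_classes * class_count): rarer classes weigh more."""
    counts = Counter(y)
    n_samples, n_classes = len(y), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def weighted_log_loss(y_true, p_positive, weights):
    """Mean binary cross-entropy in which each sample's loss is scaled by its class weight."""
    total = 0.0
    for t, p in zip(y_true, p_positive):
        loss = -math.log(p) if t == 1 else -math.log(1 - p)
        total += weights[t] * loss
    return total / len(y_true)


# Hypothetical labels: 990 legitimate (0) and 10 fraudulent (1) transactions
y = [0] * 990 + [1] * 10
weights = balanced_class_weights(y)
print({cls: round(w, 3) for cls, w in weights.items()})  # {0: 0.505, 1: 50.0}

# The same mistake (probability 0.1 on the true class) costs far more on a fraud
print(weighted_log_loss([1], [0.1], weights) > weighted_log_loss([0], [0.9], weights))  # True
```

With these weights, misclassifying a fraudulent transaction costs about 99 times as much as misclassifying a legitimate one, mirroring the imbalance ratio of the data.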

Ensemble Methods for Class Imbalance

Ensemble methods are widely used in machine learning to improve the predictive performance of models by combining multiple base learners.

When dealing with class imbalance, ensemble methods can be particularly beneficial as they can help in handling the skewed distribution of classes.

By training multiple models on different subsets of the imbalanced data and then combining their predictions, ensemble methods can mitigate the impact of class imbalance and provide more robust and accurate results.
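The sketch below shows one way to combine an ensemble with resampling, similar in spirit to balanced bagging (available in imbalanced-learn as `BalancedBaggingClassifier`). Each member is trained on all minority instances plus an equal-sized random sample of the majority class, and predictions are combined by majority vote. It assumes a binary problem, and the nearest-centroid learner is a deliberately simple, hypothetical stand-in for a real base model such as a decision tree.

```python
import math
import random
from collections import Counter


def fit_centroids(X, y):
    """Hypothetical weak learner: remember the mean feature vector of each class."""
    centroids = {}
    for cls in set(y):
        rows = [x for x, label in zip(X, y) if label == cls]
        centroids[cls] = [sum(col) / len(rows) for col in zip(*rows)]
    return centroids


def predict_centroids(centroids, x):
    """Predict the class whose centroid is closest to x."""
    return min(centroids, key=lambda cls: math.dist(x, centroids[cls]))


def fit_balanced_ensemble(X, y, n_models=5, seed=0):
    """Train each member on all minority rows plus an equal-sized random sample of majority rows."""
    rng = random.Random(seed)  # seeded for reproducibility
    counts = Counter(y)
    minority_label = min(counts, key=counts.get)  # assumes exactly two classes
    minority = [(x, label) for x, label in zip(X, y) if label == minority_label]
    majority = [(x, label) for x, label in zip(X, y) if label != minority_label]
    models = []
    for _ in range(n_models):
        subset = minority + rng.sample(majority, len(minority))
        models.append(fit_centroids([row for row, _ in subset], [lab for _, lab in subset]))
    return models


def predict_ensemble(models, x):
    """Combine the members' predictions by majority vote."""
    votes = Counter(predict_centroids(m, x) for m in models)
    return votes.most_common(1)[0][0]


# Hypothetical 1-D data: 50 majority rows between 0 and 4, three minority rows near 10
X = [[float(i % 5)] for i in range(50)] + [[10.0], [11.0], [9.5]]
y = [0] * 50 + [1] * 3
models = fit_balanced_ensemble(X, y)
print(predict_ensemble(models, [10.5]), predict_ensemble(models, [1.0]))  # 1 0
```

Every member sees a balanced training set, while the ensemble as a whole still uses many different majority rows, which limits the information loss of a single undersampled model.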

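Popular ensemble methods such as Random Forest, AdaBoost, and Gradient Boosting are commonly used to address class imbalance.

These techniques can handle imbalanced datasets by giving more weight to minority class samples or by adjusting decision boundaries: AdaBoost increases the weight of misclassified samples in each round, which often include minority class instances, while Random Forest and Gradient Boosting are typically combined with class weights or with balanced resampling for each base learner.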

Through the ensemble of diverse models, these methods can effectively tackle the challenges posed by class imbalance and enhance the overall performance of machine learning algorithms.

Frequently Asked Questions

What is Class Imbalance in Machine Learning?

Class imbalance in machine learning refers to the situation where the distribution of classes in the training dataset is skewed, with one class significantly outnumbering the other(s).

What are the challenges posed by Class Imbalance?

Class imbalance can lead to biased models, poor predictive performance, and difficulty in detecting the minority class.

How does Class Imbalance impact model performance?

Class imbalance can result in models that are biased towards the majority class, leading to poor performance in predicting the minority class.

What are some techniques to address Class Imbalance?

Techniques to address class imbalance include resampling methods, cost-sensitive learning, ensemble methods, and one-class classification approaches.

What are Resampling Methods for Handling Class Imbalance?

Resampling methods involve either oversampling the minority class, undersampling the majority class, or generating synthetic samples to balance the class distribution.

How does Cost-Sensitive Learning help in dealing with Class Imbalance?

Cost-sensitive learning assigns a higher cost to misclassifying minority class instances than majority class instances, encouraging the model to focus on correctly predicting the minority class.

How do Ensemble Methods help with Class Imbalance?

Ensemble methods combine multiple models to improve predictive performance, making them effective in handling class imbalance by reducing bias towards the majority class.

What is the One-Class Classification Approach for Imbalanced Data?

The one-class classification approach involves training a model using only the majority class and then identifying outliers or anomalies as the minority class.

What are some Evaluation Metrics for Imbalanced Data?

Evaluation metrics for imbalanced data include precision, recall, F1 score, ROC-AUC, and PR-AUC, which provide a more comprehensive assessment of model performance than accuracy.

Can you provide examples of Case Studies on Handling Class Imbalance in Machine Learning?

Case studies on handling class imbalance in machine learning include using SMOTE for oversampling, adjusting class weights in algorithms, and utilizing ensemble methods to improve predictive performance on imbalanced datasets.
